1. Field of the Invention

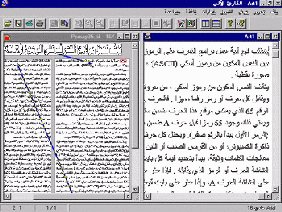


The present invention relates to optical character recognition (OCR), and more specifically to an automatic Arabic text image optical character recognition method that recognizes the characters in optical images of Arabic text without requiring word, sub-word, or character segmentation.

2. Description of the Related Art

Arabic Text Recognition (ATR) has not been researched as thoroughly as the recognition of Latin, Japanese, or Chinese text. This lag may be attributed to a lack of adequate support in terms of journals, books, and the like; limited interaction between researchers in the field; a lack of general supporting utilities such as Arabic text databases, dictionaries, programming tools, and supporting staff; and the late start of Arabic text recognition (the first publication appeared in 1975, compared with the 1940s for Latin character recognition). Moreover, researchers may have shied away from Arabic text because of the special characteristics of the Arabic language.

Because of the advantages of Hidden Markov Models (HMMs), researchers have used them for both speech and text recognition. When HMMs are used, there is no need to segment Arabic text; in addition, HMMs are resistant to noise, they can tolerate variations in writing, and HMM tools are freely available.

Some researchers use HMMs for handwritten word recognition, while others use them for text recognition. HMMs are also known to have been used for off-line Arabic handwritten digit recognition and for character recognition. It has additionally been demonstrated that techniques which extract different types of features from each digit as a whole, rather than using the sliding window principle adopted by most researchers who use HMMs, must be preceded by a segmentation step, which is error-prone. Techniques that combine the sliding window principle with the extraction of different types of features are also well known; such techniques have been used for online recognition of handwritten Persian characters and for recognition of handwritten Farsi (Arabic-script) words.

Examples of existing methods include a database for Arabic handwritten text recognition, a database for Arabic handwritten checks, preprocessing methods, segmentation of Arabic text, utilization of different types of features, and use of multiple classifiers.

Thus, an automatic Arabic text image optical character recognition method solving the aforementioned problems is desired.

The automatic Arabic text image optical character recognition method includes training the system on printed Arabic text, then using the resulting models to classify new, previously unseen scanned images of printed Arabic text, and finally generating the recognized Arabic textual information. Scanned images of printed Arabic text, together with copies of a minimal Arabic text, are used to train the system. Each page is segmented into lines, and the features of each line are extracted using the feature extraction technique described herein. These features are input to a Hidden Markov Model (HMM). Once the features of all the training data have been presented, the HMM runs its training algorithms to produce the codebook and language models.

In the classification stage, a new scanned image of printed Arabic text is input to the system. It passes through line segmentation, where the lines are extracted. In the feature extraction stage, the features of these lines are extracted and input to the classifier, which generates the recognized Arabic text. This text is the output of the system corresponding to the scanned input image data.

The text produced is analyzed, and the recognition rates computed. The achieved high recognition rates indicate the effectiveness of the system's feature extraction technique and the usefulness of the minimal Arabic text and the statistical analysis of Arabic text that is implemented with the system.

These and other features of the present invention will become readily apparent upon further review of the following specification and drawings.

FIG. 1 is a block diagram illustrating the Automatic Arabic Text Recognition method according to the present invention.

FIG. 2 is a block diagram showing the isolated forms of all basic letters of Arabic text.

FIG. 3 is a block diagram showing a subset of Arabic text characteristics that may be strongly related to character recognition in the method of the present invention.

FIG. 4 is a block diagram showing a 7-state HMM, indicating the transitions between states in the method of the present invention.

FIG. 5 is a block diagram showing an Arabic text line with stacked line segments, a sliding window, and feature segments in the method of the present invention.

FIG. 6 is a block diagram showing the minimal Arabic script in the method of the present invention.

Similar reference characters denote corresponding features consistently throughout the attached drawings.

The automatic Arabic text image optical character recognition method, hereinafter referred to as the OCR method, has steps that may be implemented on a computer system. Such computer systems are well known by persons having ordinary skill in the art, and may comprise an optical scanner, a processor for executing software implementing the OCR method steps, and a display device for displaying the results.

FIG. 1 shows the OCR method steps 10. The OCR method 10 has two main phases: training the system on printed Arabic text images by inputting images of printed Arabic text 40 and minimal Arabic text 42, and then using the resulting models to classify new, previously unseen scanned images of printed Arabic text, for which the corresponding recognized textual information is generated. Scanned images of printed Arabic text and copies of the minimal Arabic text are arranged in rows of text images 44 and then used to train the system. Preprocessing 46 is performed, followed by a segmentation step 48 in which each page is segmented into lines. The features of each line are extracted at step 38 and input to the HMM at step 36. Once the features of all the training data have been presented, the HMM runs its training algorithms to produce codebook and language models, which are stored in a language model database 32. The trained HMMs are stored in an HMM models database 30. A statistical and syntactical analysis of the text is performed at step 34.
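The training phase above produces a codebook, and Table V later reports codebook sizes per font, but the source does not say how the codebook is built. A common choice, assumed here, is k-means vector quantization: the 13-dimensional feature vectors are clustered, and each vector is then replaced by the index of its nearest codeword, giving the discrete symbols a discrete HMM consumes. The names `train_codebook` and `quantize` are hypothetical; HTK ships its own quantization tool, which this sketch does not reproduce.

```python
from typing import List, Sequence

Vector = Sequence[float]


def _sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(v: Vector, codebook: List[List[float]]) -> int:
    """Return the index (discrete HMM symbol) of the nearest codeword."""
    return min(range(len(codebook)), key=lambda k: _sq_dist(v, codebook[k]))


def train_codebook(data: List[Vector], size: int, iterations: int = 20) -> List[List[float]]:
    """Build a VQ codebook with plain Lloyd (k-means) iterations.

    Centroids are initialised deterministically from evenly spaced samples.
    """
    if size > len(data):
        raise ValueError("codebook size exceeds number of training vectors")
    step = len(data) // size
    codebook = [list(data[i * step]) for i in range(size)]
    for _ in range(iterations):
        # Assignment step: each vector joins the cluster of its nearest centroid.
        clusters: List[List[Vector]] = [[] for _ in range(size)]
        for v in data:
            clusters[quantize(v, codebook)].append(v)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        for k, members in enumerate(clusters):
            if members:
                dim = len(members[0])
                codebook[k] = [sum(m[d] for m in members) / len(members) for d in range(dim)]
    return codebook


cb = train_codebook([[0, 0], [0, 1], [10, 10], [10, 11]], size=2)
print(cb)  # [[0.0, 0.5], [10.0, 10.5]]
```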

In the classification stage, a new scanned image of printed Arabic text 12 is input for recognition by the OCR method 10. The text image 12 is formatted into row images 14, preprocessed 16, and then passed through line segmentation 18, where the lines are extracted. In the feature extraction stage 20, the features of these lines are extracted and input to an optimized HMM 22, which is selected from the HMM models database 30. The recognized Arabic text is then post-processed 26 and finally generated 28 on a display device. This is the output of the system corresponding to the scanned input image data.

The text produced was analyzed, and the recognition rates computed. The achieved high recognition rates indicate the effectiveness of our feature extraction technique and the usefulness of the minimal Arabic text and the statistical analysis of Arabic text that is implemented with the system.

Arabic text is written from right to left. As shown in FIG. 2, the Arabic alphabet 200 has twenty-eight basic letters. An Arabic letter may have up to four different basic shapes, depending on its position in the word, i.e., whether it is in standalone, initial, terminal, or medial form. Letters of a word may overlap vertically, with or without touching. Different Arabic letters have different sizes (height and width). Letters in a word can carry short vowels (diacritics), which are written as strokes placed either above or below the letters. A different diacritic on a letter may change the meaning of a word. In digital text, each diacritic is encoded as a separate character. Readers of Arabic are accustomed to reading unvocalized text, deducing the meaning from context.
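The statement that each diacritic is a separate code in digital text can be checked with Python's standard `unicodedata` module. The sample word and the use of the letter BEH to show positional shapes are illustrative choices; Unicode's Arabic presentation forms are one way to give each positional shape its own code, but the source does not say which coding the method uses.

```python
import unicodedata

# A three-letter word with a FATHA (U+064E) after each letter.
word = "\u0643\u064E\u062A\u064E\u0628\u064E"


def strip_diacritics(text: str) -> str:
    """Remove Arabic short-vowel marks, which Unicode encodes as combining characters."""
    return "".join(ch for ch in text if not unicodedata.combining(ch))


print(len(word))                    # 6 code points: 3 letters + 3 diacritics
print(len(strip_diacritics(word)))  # 3

# The four positional shapes of BEH in the Arabic Presentation Forms-B block.
for cp in (0xFE8F, 0xFE90, 0xFE91, 0xFE92):
    print(hex(cp), unicodedata.name(chr(cp)))
```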

As shown in FIG. 3, a subset of Arabic text characteristics 300 may be strongly related to character recognition. For example, a base line, overlapping letters, diacritics, and three shapes of the Lam character (terminal, medial, and initial) are characteristics that have been demonstrated to be related to character recognition tasks. Table I shows the basic Arabic letters with their categories.

TABLE I: Basic Arabic Letters

| No. | Standalone | Terminal | Medial | Initial | Shapes | Class |
|---|---|---|---|---|---|---|
| 1 | | | | | 1 | 1 |
| 2 | | | | | 2 | 2 |
| 3 | | | | | 2 | 2 |
| 4 | | | | | 2 | 2 |
| 5 | | | | | 2 | 2 |
| 6 | | | | | 4 | 3 |
| 7 | | | | | 2 | 2 |
| 8 | | | | | 4 | 3 |
| 9 | | | | | 2 | 2 |
| 10 | | | | | 4 | 3 |
| 11 | | | | | 4 | 3 |
| 12 | | | | | 4 | 3 |
| 13 | | | | | 4 | 3 |
| 14 | | | | | 4 | 3 |
| 15 | | | | | 2 | 2 |
| 16 | | | | | 2 | 2 |
| 17 | | | | | 2 | 2 |
| 18 | | | | | 2 | 2 |
| 19 | | | | | 4 | 3 |
| 20 | | | | | 4 | 3 |
| 21 | | | | | 4 | 3 |
| 22 | | | | | 4 | 3 |
| 23 | | | | | 4 | 3 |
| 24 | | | | | 4 | 3 |
| 25 | | | | | 4 | 3 |
| 26 | | | | | 4 | 3 |
| 27 | | | | | 4 | 3 |
| 28 | | | | | 4 | 3 |
| 29 | | | | | 4 | 3 |
| 30 | | | | | 4 | 3 |
| 31 | | | | | 4 | 3 |
| 32 | | | | | 4 | 3 |
| 33 | | | | | 4 | 3 |
| 34 | | | | | 2 | 2 |
| 35 | | | | | 2 | 2 |
| 36 | | | | | 2 | 2 |
| 37 | | | | | 2 | 2 |
| 38 | | | | | 2 | 2 |
| 39 | | | | | 2 | 2 |
| 40 | | | | | 4 | 3 |

We group the letters into three classes according to the number of shapes each letter takes. Class 1 consists of the single shape of the Hamza, which occurs in standalone form only, as shown in row 1 of Table I. Hamza does not connect with any other letter.

The second class (Class 2) consists of the letters that can occur either standalone or connected only from the right (rows 2-5, 7, 9, 15-18, and 34-39 of Table I).

The third class (Class 3) consists of the letters that can be connected from either side or from both sides, and can also appear standalone (rows 6, 8, 10-14, 19-33, and 40 of Table I).

To use Hidden Markov Models (HMMs), the feature vectors are computed as a function of an independent variable, here the horizontal position along the text line. This mirrors the use of HMMs in speech recognition, where sliding frames (windows) are used. Using the sliding window technique bypasses the need to segment Arabic text, and the same technique is applicable to other languages. A known HMM classifier is used; however, the OCR method 10 utilizes a customized set of HMM parameters. The OCR method 10 allows transitions only to the current state, the next state, and the state after that. This structure allows nonlinear variations in horizontal position.

For example, the Hidden Markov Model Toolkit (HTK), available from the University of Cambridge, models the feature vectors with a mixture of Gaussians. In the recognition phase it uses the Viterbi algorithm, which searches for the most likely sequence of characters given the sequence of input feature vectors.
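HTK's decoder runs this search over a network of character models; its core is the Viterbi recursion. The sketch below shows that recursion for a single HMM with discrete observations (codebook indices), working with log probabilities to avoid numerical underflow on long text lines. It illustrates the algorithm only and is not HTK's implementation; the function name and the toy model in the usage example are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

NEG_INF = float("-inf")


def _log(p: float) -> float:
    """Log probability, with log(0) mapped to -inf for forbidden transitions."""
    return math.log(p) if p > 0 else NEG_INF


def viterbi(obs: Sequence[int], start: Sequence[float],
            trans: Sequence[Sequence[float]],
            emit: Sequence[Sequence[float]]) -> Tuple[List[int], float]:
    """Most likely state path and its log probability for a discrete HMM.

    obs:   sequence of codebook indices
    start: start[i] is the probability of beginning in state i
    trans: trans[i][j] is the probability of moving from state i to state j
    emit:  emit[i][k] is the probability of state i emitting symbol k
    """
    n = len(start)
    # delta[i]: best log probability of any path ending in state i so far.
    delta = [_log(start[i]) + _log(emit[i][obs[0]]) for i in range(n)]
    backptr: List[List[int]] = []
    for o in obs[1:]:
        new_delta, ptrs = [], []
        for j in range(n):
            best_i = max(range(n), key=lambda i: delta[i] + _log(trans[i][j]))
            ptrs.append(best_i)
            new_delta.append(delta[best_i] + _log(trans[best_i][j]) + _log(emit[j][o]))
        delta = new_delta
        backptr.append(ptrs)
    # Trace back from the best final state.
    last = max(range(n), key=lambda i: delta[i])
    path = [last]
    for ptrs in reversed(backptr):
        path.append(ptrs[path[-1]])
    path.reverse()
    return path, delta[last]


# Toy 3-state left-to-right model with two codebook symbols.
path, logp = viterbi(
    [0, 0, 1, 1, 0],
    start=[1.0, 0.0, 0.0],
    trans=[[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]],
    emit=[[0.9, 0.1], [0.1, 0.9], [0.9, 0.1]],
)
print(path)  # [0, 0, 1, 1, 2]
```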

A left-to-right HMM is implemented for the OCR method 10. As shown in FIG. 4, a 7-state HMM 400 is configured with a predetermined sequence of allowable state transitions. The 7-state HMM 400 allows relatively large variations in the horizontal position of the Arabic text. The sequence of state transitions in training and testing the model is related to the observations of each text segment's features. We experimented with different numbers of states and codebook sizes, and the best mode for performing the OCR method 10 is described herein. Although each character model could have a different number of states, the OCR method 10 adopts the same number of states for all characters in a font. However, the number of states and the codebook size that give the best recognition rate may vary from font to font.
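Allowing transitions only to the current, next, and following state gives a Bakis-style left-to-right topology. A minimal sketch of an initial transition matrix for the 7-state model of FIG. 4, assuming illustrative starting probabilities (`p_stay`, `p_next`, `p_skip`) that training would re-estimate; how the last states are handled is not stated in the source, so renormalizing there is also an assumption. `bakis_transitions` is a hypothetical name.

```python
from typing import List


def bakis_transitions(n_states: int, p_stay: float = 0.4,
                      p_next: float = 0.4, p_skip: float = 0.2) -> List[List[float]]:
    """Left-to-right transition matrix allowing only i->i, i->i+1 and i->i+2.

    Probability that would leave the model past the last state is folded
    back onto the reachable states, so every row sums to 1.
    """
    a = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states):
        moves = [(i, p_stay), (i + 1, p_next), (i + 2, p_skip)]
        allowed = [(j, p) for j, p in moves if j < n_states]
        total = sum(p for _, p in allowed)
        for j, p in allowed:
            a[i][j] = p / total  # renormalise near the end of the chain
    return a


A = bakis_transitions(7)
for row in A:
    print(" ".join(f"{p:.2f}" for p in row))
```

Backward jumps and jumps of more than two states stay at zero, which is what lets the model stretch (self-loops) or compress (skips) a character horizontally.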

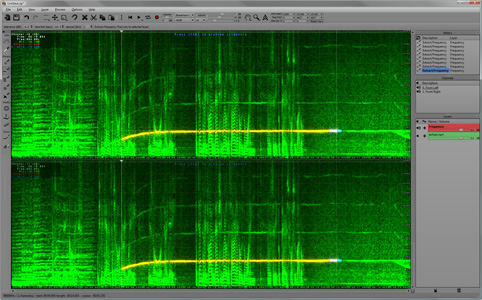

A technique based on the sliding window principle is implemented to extract Arabic text features. Windows of variable width and height are used, and the windows overlap both horizontally and vertically.

In many experiments, we tried different values for the window width and height and for the vertical and horizontal overlaps. Different types of windows were then used to obtain more features from each vertical segment and to determine the most suitable window size and number of overlapping cells, vertically and horizontally. The direction of the text line is taken as the feature extraction axis. In addition, different types of features were tested.

Based on the analysis of large corpora of Arabic text and a large number of experiments, the best Arabic text line model has been determined to have a stack of six horizontal line segments that split the Arabic text line vertically. The upper (top) and lower (bottom) segment heights are preferably approximately ¼ of the text line height. All other segments are preferably approximately ⅛ of the Arabic text line height. FIG. 5 illustrates a preferred hierarchical window structure 500 that results in the highest recognition rate. The sliding window is shown in two consecutive positions, s1 and s2. The window overlaps with the previous window by one pixel. Each Arabic text segment is represented by a 13-dimensional feature vector, as described below.

Starting from the first pixel of the text line image, a vertical segment preferably three pixels wide and spanning the full text line height (TLH) is taken. The windows of the first hierarchical level have different dimensions: the top and bottom windows are preferably 3 pixels wide and TLH/4 high, and the other four windows are preferably 3 pixels wide and TLH/8 high. The number of black pixels in each window of the first level of the hierarchical structure is counted; these six vertically non-overlapping windows give the first six features (features 1 to 6). Three additional features (features 7 to 9) are computed from three vertically non-overlapping windows of 3-pixel width and TLH/4 height (the windows of the second level of the hierarchical structure). Then, overlapping windows of 3-pixel width and TLH/2 height (the windows of the third level), overlapping by TLH/4, are used to calculate three more features (features 10 to 12). The last feature (feature 13) is the number of black pixels in the whole vertical segment (the window of the fourth level of the hierarchical structure).

Hence, thirteen features are extracted for each horizontal position of the window. To calculate the next set of features, the vertical window is moved horizontally, keeping an overlap of one pixel. The thirteen features extracted from each vertical strip serve as a feature vector in the training and/or testing processes.
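The window hierarchy above, using the sums listed in Table II, can be sketched in pure Python. Assumptions: the line image is already binarized (1 = black), band boundaries are rounded down when the text line height is not a multiple of 8, strips advance left to right unless `right_to_left` is set, and trailing columns narrower than one strip are ignored. All function names are hypothetical.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]  # rows of 0/1 pixels, 1 = black


def band_edges(tlh: int) -> List[int]:
    """Row boundaries of the six first-level bands: TLH/4, four x TLH/8, TLH/4."""
    return [tlh * k // 8 for k in (0, 2, 3, 4, 5, 6, 8)]


def strip_features(img: Image, x: int, width: int = 3) -> List[int]:
    """Thirteen black-pixel counts for the vertical strip starting at column x."""
    edges = band_edges(len(img))
    f = [0] * 14  # f[1]..f[13]; f[0] unused so indices match F1..F13
    for b in range(6):  # first level: six stacked bands
        f[b + 1] = sum(img[r][c] for r in range(edges[b], edges[b + 1])
                       for c in range(x, x + width))
    f[7] = f[1] + f[2]           # second level
    f[8] = f[3] + f[4]
    f[9] = f[5] + f[6]
    f[10] = f[7] + f[3]          # third level: top half
    f[11] = f[4] + f[9]          # bottom half
    f[12] = f[2] + f[8] + f[5]   # middle half
    f[13] = f[10] + f[11]        # fourth level: whole strip
    return f[1:]


def line_features(img: Image, width: int = 3, overlap: int = 1,
                  right_to_left: bool = False) -> List[List[int]]:
    """Slide the strip across the line, keeping `overlap` columns in common."""
    step = width - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than the strip width")
    if right_to_left:
        # Mirror each row so that strips come out in Arabic reading order.
        img = [list(reversed(row)) for row in img]
    n_cols = len(img[0])
    return [strip_features(img, x, width) for x in range(0, n_cols - width + 1, step)]


# A fully black 16-row, 7-column line gives strips at columns 0, 2 and 4.
feats = line_features([[1] * 7 for _ in range(16)])
print(len(feats), feats[0])
```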

Note that the window size and the vertical and horizontal overlaps are configurable, so different features may be extracted using different window sizes and overlaps. The OCR method 10 extracts a small number of features of a single type (only thirteen features). Moreover, the OCR method 10 uses windows of different sizes, uses a hierarchical structure of windows for the same vertical strip, bypasses the need to segment Arabic text, and applies to other languages with minimal changes to the basic method described herein. The thirteen features were chosen after extensive experimental testing. Table II illustrates the features and windows used in the feature extraction phase.

TABLE II: Features and Windows in Feature Extraction Phase

| Features F1 to F6 | Features F7 to F9 | Features F10 to F12 | Feature F13 |
|---|---|---|---|
| F1 (sum of black pixels in 1st vertical rectangle) | F7 = F1 + F2 | F10 = F7 + F3 | F13 = F10 + F11 |
| F2 (sum of black pixels in 2nd vertical rectangle) | F8 = F3 + F4 | F11 = F4 + F9 | |
| F3 (sum of black pixels in 3rd vertical rectangle) | F9 = F5 + F6 | F12 = F2 + F8 + F5 | |
| F4 (sum of black pixels in 4th vertical rectangle) | | | |
| F5 (sum of black pixels in 5th vertical rectangle) | | | |
| F6 (sum of black pixels in 6th vertical rectangle) | | | |

No adequate general-purpose database for Arabic text recognition is freely available to researchers; hence, different researchers of Arabic text recognition use different data. To support this work, we addressed the data problem in several ways, described herein.

The OCR method 10 uses a script consisting of the minimum number of letters (in meaningful Arabic words) that covers all possible letter shapes. Such a script may be used for preparing databases and benchmarks for Arabic optical character recognition. Here, it was used to augment the training of the HMM to ensure that at least a minimum number of samples of each character shape are used. To generate this script, a corpus consisting of the Arabic text of two Arabic lexicons, two Hadith books, and a lexicon giving the meanings of Quran tokens in Arabic was used. Electronic versions of such books and of other classical Arabic books are available from various sites on the Internet. In a test in which a Hidden Markov Model was trained on an arbitrary 2,500 lines of Arabic text, some characters had as few as eight occurrences, while others had over one thousand. As expected, characters with few occurrences had low recognition rates because there were not enough training samples. These are among the reasons that motivated us to look for a minimal Arabic script for use in Arabic computing research.

The OCR method 10 addresses this problem by using a minimal Arabic text dataset. Table I, supra, shows that there are thirty-nine letter shapes that can occur at the end of a word (terminal form) but only twenty-three shapes that can occur at the beginning of a word (initial form). Every connected sequence of two or more letters begins with exactly one initial shape and ends with exactly one terminal shape, so covering all thirty-nine terminal shapes requires at least thirty-nine initial-shape occurrences; with only twenty-three distinct initial shapes, some of them must be repeated in order to include all the shapes of Arabic letters.
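The two counts can be reproduced from the Shapes and Class columns of Table I. The sketch assumes each class has exactly the positional forms described for it (Class 1: standalone only; Class 2: standalone and terminal; Class 3: all four forms); `count_forms` is a hypothetical helper name.

```python
from collections import Counter

# Number of positional shapes for each row (letter) of Table I.
SHAPES = [1, 2, 2, 2, 2, 4, 2, 4, 2, 4, 4, 4, 4, 4, 2, 2, 2, 2,  # rows 1-18
          4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4,           # rows 19-33
          2, 2, 2, 2, 2, 2,                                      # rows 34-39
          4]                                                     # row 40

CLASS_OF = {1: 1, 2: 2, 4: 3}  # shapes per letter -> class
FORMS = {
    1: ("standalone",),                                   # Hamza
    2: ("standalone", "terminal"),                        # joins on the right only
    3: ("standalone", "terminal", "medial", "initial"),  # joins on both sides
}


def count_forms(shapes):
    """Count how many distinct letter shapes exist for each positional form."""
    counts = Counter()
    for n in shapes:
        counts.update(FORMS[CLASS_OF[n]])
    return counts


class_sizes = Counter(CLASS_OF[n] for n in SHAPES)
counts = count_forms(SHAPES)
print(counts["terminal"], counts["initial"], sum(counts.values()))  # 39 23 125
# Each connected letter sequence has one initial and one terminal shape, so
# at least this many extra initial-shape occurrences are needed:
print(counts["terminal"] - counts["initial"])  # 16
```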

Using corpora of around 20 megabytes of text and running our analysis and search modules, we obtained a nearly optimal script, which was then further optimized manually over several iterations to arrive at the text shown in FIG. 6. As shown in FIG. 6, each initial letter shape 600 occurs twice, to compensate for the larger number of letter shapes that occur at the end of a word (terminal form). All other letter shapes are used only once. Hence, the text shown in FIG. 6 is the minimal text that covers all the shapes of the Arabic alphabet, minimal in terms of the number of letter shapes used.
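The source does not describe its search modules. A standard way to approximate such a script, shown here as a substitute technique rather than the authors' method, is weighted greedy set cover: repeatedly choose the corpus word that adds the most not-yet-covered letter shapes per letter used. Greedy cover yields a near-minimal selection, not a guaranteed minimum, which is consistent with the manual refinement described above. The shape labels in the example are hypothetical placeholders.

```python
from typing import Dict, List, Sequence, Set


def greedy_cover(words: Dict[str, Sequence[str]], required: Set[str]) -> List[str]:
    """Pick words until every required letter shape appears at least once.

    words:    candidate word -> sequence of shape labels it is written with
    required: all shape labels that must be covered
    """
    uncovered = set(required)
    candidates = sorted(w for w in words if words[w])  # skip empty entries
    chosen: List[str] = []
    while uncovered:
        # New shapes gained per letter spent; ties go to the first word in
        # sorted order, which keeps the result deterministic.
        best = max(candidates,
                   key=lambda w: len(set(words[w]) & uncovered) / len(words[w]))
        gain = set(words[best]) & uncovered
        if not gain:
            raise ValueError(f"shapes not coverable: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= gain
    return chosen


words = {
    "w1": ("a_i", "b_t"),
    "w2": ("a_i", "c_m", "d_t"),
    "w3": ("c_m", "c_m", "d_t"),
}
print(greedy_cover(words, {"a_i", "b_t", "c_m", "d_t"}))  # ['w1', 'w2']
```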

Selected printed Arabic text was used to generate synthesized images. The text was extracted from the books of Saheh Al-Bukhari and Saheh Muslem, which represent samples of standard classical Arabic. The extracted data comprises 2,766 lines of text totaling 224,109 characters, including spaces. The average word length is 3.93 characters. The shortest line has forty-three characters, and the longest line has eighty-nine characters. This data was used in the feature extraction, training, and testing of our technique. Images of the selected data were generated in eight fonts, viz., Arial, Tahoma, Akhbar, Thuluth, Naskh, Simplified Arabic, Andalus, and Traditional Arabic. Table III shows samples of these fonts.

TABLE III: Selected Font Samples

| Font Name | Sample |
|---|---|
| Arial | |
| Tahoma | |
| Akhbar | |
| Thuluth | |
| Naskh | |
| Simplified Arabic | |
| Andalus | |
| Traditional Arabic | |

The selected Arabic text was also printed on paper in the different fonts and scanned at a resolution of 300 dots per inch. This text was likewise used to train and test the OCR method 10.

To analyze Arabic text statistically, two Arabic books were chosen: Saheh Al-Bukhari and Saheh Muslem, which represent classical Arabic. The results of the analysis are tables of the frequencies of Arabic letters and syllables, including the frequency of each Arabic letter in each syllable, the frequencies of bigrams (a letter and the letter that follows it) in each syllable, and the percentage usage of Arabic letters and syllables. The analyzed text contains 2,217,178 syllables, of which 18,170 are unique; 4,405,318 characters; and 1,095,274 words, of which 50,367 are unique. Table IV shows the frequencies and lengths of syllables.
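A minimal sketch of these counts, treating each syllable as a string of letters; `cumulative_share` gives the kind of figures summarized in Table IV. The source does not give its rule for splitting text into syllables, so the input is assumed to be pre-split, and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def letter_stats(syllables: Iterable[str]) -> Tuple[Counter, Counter, Counter]:
    """Count letters, within-syllable bigrams, and syllable lengths."""
    letters, bigrams, lengths = Counter(), Counter(), Counter()
    for s in syllables:
        letters.update(s)
        bigrams.update(zip(s, s[1:]))  # a letter and the letter that follows it
        lengths[len(s)] += 1
    return letters, bigrams, lengths


def cumulative_share(lengths: Counter) -> Dict[int, float]:
    """Percentage of syllables whose length is <= L, for each observed L."""
    total = sum(lengths.values())
    running, share = 0, {}
    for length in sorted(lengths):
        running += lengths[length]
        share[length] = 100.0 * running / total
    return share


letters, bigrams, lengths = letter_stats(["ab", "abc", "b", "ab"])
print(cumulative_share(lengths))  # {1: 25.0, 2: 75.0, 3: 100.0}
```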

TABLE IV: Frequency and Lengths of Syllables

| Share of syllables | Syllable length |
|---|---|
| 90% | 3 or less |
| 98% | 4 or less |

Ninety percent of the syllables have a length of 3 or less, and 98% have a length of 4 or less. There are only eight syllables of length 9. As the length of syllables decreases, the number of different syllables increases. These tables may be used by the HMM to generate the probabilities of Arabic letters, bigrams, trigrams, etc., especially when the training data is limited.
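Turning such counts into letter-transition probabilities typically requires smoothing, since some letter pairs never occur in limited training data. The sketch assumes add-one (Laplace) smoothing over a fixed alphabet; the source does not state which estimator is used, and `bigram_model` is a hypothetical name.

```python
from collections import Counter
from typing import Callable, Iterable


def bigram_model(texts: Iterable[str], alphabet: str) -> Callable[[str, str], float]:
    """Return P(next | prev) estimated with add-one (Laplace) smoothing."""
    pair_counts, prev_counts = Counter(), Counter()
    for t in texts:
        for a, b in zip(t, t[1:]):
            pair_counts[(a, b)] += 1
            prev_counts[a] += 1
    v = len(alphabet)

    def prob(prev: str, nxt: str) -> float:
        # Unseen pairs keep a small non-zero probability instead of zero.
        return (pair_counts[(prev, nxt)] + 1) / (prev_counts[prev] + v)

    return prob


p = bigram_model(["aab", "ab"], alphabet="ab")
print(p("a", "b"), p("a", "a"), p("b", "a"))  # 0.6 0.4 0.5
```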

To have enough samples of each class for each font, 2,500 lines were used for training and the remaining 266 lines for testing; there is no overlap between the training and testing samples. A file containing the feature vectors of each line was prepared. The feature vectors consist of the thirteen features extracted from each vertical strip of the text line image, as described earlier.

The feature vectors of all the vertical strips of a line are concatenated to give the feature vector sequence of the text line. The feature files of the first 2,500 lines form the observation list for training, and the remaining 266 feature files form the observation list for testing. Fifteen more lines of text, consisting of five copies of the minimal Arabic script, were added to the training set to ensure that a sufficient number of samples of all Arabic letter shapes is included. This generally improves the recognition rate of letters that do not have enough samples in the training set, as the recognition rates of the classification phase confirmed.
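A sketch of how the two observation lists could be assembled, assuming each line has already been converted into a sequence of 13-value vectors and that the minimal script occupies three lines (so that five copies give the fifteen extra training lines). `build_observation_lists` is a hypothetical helper, not part of the source.

```python
from typing import List, Sequence, Tuple

FeatureSeq = List[List[float]]  # one 13-value vector per vertical strip


def build_observation_lists(lines: Sequence[FeatureSeq],
                            minimal_script: Sequence[FeatureSeq],
                            n_train: int = 2500,
                            copies: int = 5) -> Tuple[List[FeatureSeq], List[FeatureSeq]]:
    """Split line feature sequences into disjoint training and testing lists.

    The first n_train lines are used for training and the rest for testing;
    `copies` copies of the minimal-script lines are appended to training only.
    """
    train = list(lines[:n_train])
    test = list(lines[n_train:])
    for _ in range(copies):
        train.extend(minimal_script)
    return train, test


lines = [[[float(i)] * 13] for i in range(2766)]
minimal = [[[0.0] * 13]] * 3
train, test = build_observation_lists(lines, minimal)
print(len(train), len(test))  # 2515 266
```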

A large number of trials were conducted to find the most suitable combination of the number of states and codebook size. We tested numbers of states from 3 to 15 and codebook sizes of 32, 64, 128, 192, 256, 320, 384, and 512. Table V shows, for each font, the combination of number of states and codebook size that gives the best recognition rate.
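The search covers 13 state counts and 8 codebook sizes, i.e., 104 combinations per font, which amounts to an exhaustive grid search. In the sketch, `evaluate` is a hypothetical callback standing in for training and testing the recognizer on one configuration; the toy scoring function in the example is invented for illustration.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple

STATE_RANGE = range(3, 16)                        # 3 to 15 states
CODEBOOK_SIZES = (32, 64, 128, 192, 256, 320, 384, 512)


def best_configuration(evaluate: Callable[[int, int], float],
                       states: Iterable[int] = STATE_RANGE,
                       sizes: Iterable[int] = CODEBOOK_SIZES) -> Tuple[int, int, float]:
    """Return (n_states, codebook_size, score) with the highest score."""
    best: Optional[Tuple[int, int, float]] = None
    for n, k in product(states, sizes):
        score = evaluate(n, k)  # e.g. recognition rate on held-out lines
        if best is None or score > best[2]:
            best = (n, k, score)
    return best


print(best_configuration(lambda n, k: -abs(n - 7) - abs(k - 128) / 64))
```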

TABLE V: States and Codebook Size Per Font

| Font Name | Number of States | Codebook Size |
|---|---|---|
| Arial | 5 | 256 |
| Tahoma | 7 | 128 |
| Akhbar | 5 | 256 |
| Thuluth | 7 | 128 |
| Naskh | 7 | 128 |
| Simplified Arabic | 7 | 128 |
| Traditional Arabic | 7 | 256 |
| Andalus | 7 | 256 |

The results of testing 266 lines are shown in Table VI, which is a summary of results per font type, with and without shape expansion.

TABLE VI: Testing of 266 Lines

| Text font | Expanded shapes: % Correctness | Expanded shapes: % Accuracy | Collapsed shapes: % Correctness | Collapsed shapes: % Accuracy |
|---|---|---|---|---|
| Arial | 99.89 | 99.85 | 99.94 | 99.90 |
| Tahoma | 99.80 | 99.57 | 99.92 | 99.68 |
| Akhbar | 99.33 | 99.25 | 99.43 | 99.34 |
| Thuluth | 98.08 | 98.02 | 98.85 | 98.78 |
| Naskh | 98.12 | 98.02 | 98.19 | 98.09 |
| Simplified Arabic | 99.69 | 99.55 | 99.84 | 99.70 |
| Traditional Arabic | 98.85 | 98.81 | 98.87 | 98.83 |
| Andalus | 98.92 | 96.83 | 99.99 | 97.86 |

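Table VI also shows the effect of giving each shape of each character a unique code in the classification phase (columns 2 and 3) and then combining the shapes of the same character into one code (columns 4 and 5). In all cases, combining the different shapes of a character into one code after recognition improves both correctness and accuracy. Correctness and accuracy were calculated using the following two equations, where N is the total number of characters in the reference text, D the number of deletions, S the number of substitutions, and I the number of insertions:

$$\text{Correctness} = \frac{N - D - S}{N} \times 100\%$$

$$\text{Accuracy} = \frac{N - D - S - I}{N} \times 100\%$$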
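Correctness and accuracy match the definitions used by HTK's HResults tool: with N reference characters, D deletions, S substitutions, and I insertions, correctness is (N - D - S)/N and accuracy is (N - D - S - I)/N, both as percentages. Plugging in the Table VII totals reproduces the reported Arial figures; the function names are illustrative.

```python
def correctness(n: int, deletions: int, substitutions: int) -> float:
    """Percent correct: (N - D - S) / N * 100."""
    return 100.0 * (n - deletions - substitutions) / n


def accuracy(n: int, deletions: int, substitutions: int, insertions: int) -> float:
    """Percent accuracy, which also penalises insertions: (N - D - S - I) / N * 100."""
    return 100.0 * (n - deletions - substitutions - insertions) / n


# Totals from Table VII (Arial): N = 22524, D = 0, S = 13, I = 10.
print(round(correctness(22524, 0, 13), 2))   # 99.94
print(round(accuracy(22524, 0, 13, 10), 2))  # 99.9
```

Because insertions only lower accuracy, a row with no substitutions but some insertions (for example 207 samples with 4 insertions in Table VII) shows 100% correctness but about 98.1% accuracy.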

Table VII shows the classification results for the Arial font. The correctness was 99.94% and the accuracy 99.90%. Only four letters out of forty-three had any errors. In the first error, one letter was substituted by another four times out of 234 instances; the only difference between the two characters is the dot in the body of the letter. The second error consists of two substitutions of one letter by another out of 665 instances. The third error was the substitution of a ligature by a blank four times out of forty instances. The fourth error was a single substitution of a ligature out of 491 instances. In addition to the substitutions, there were ten insertions (two of them blanks).

TABLE VII: Classification Results for Arial Font

| Let | Samples | Correct | Errors | % Recognition | % Error | Del | Ins | % Correctness | % Accuracy | Error Details |
|---|---|---|---|---|---|---|---|---|---|---|
| Sil | 532 | 532 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 83 | 83 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 10 | 8 | 2 | 80.0 | 20.0 | 0 | 0 | 80.0 | 80.0 | |
| | 484 | 484 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 14 | 14 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 157 | 157 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 43 | 43 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 2114 | 2114 | 0 | 100.0 | 0.0 | 0 | 1 | 100.0 | 100.0 | |
| | 409 | 409 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 234 | 234 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 420 | 420 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 124 | 124 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 170 | 170 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 234 | 230 | 4 | 98.3 | 1.7 | 0 | 0 | 98.3 | 98.3 | -4 |
| | 113 | 113 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 344 | 344 | 0 | 100.0 | 0.0 | 0 | 1 | 100.0 | 99.7 | |
| | 97 | 97 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 702 | 702 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 46 | 46 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 640 | 640 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 119 | 119 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 415 | 415 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 93 | 93 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 68 | 68 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 15 | 15 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 818 | 818 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 44 | 44 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 495 | 495 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 467 | 467 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 288 | 288 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 2136 | 2136 | 0 | 100.0 | 0.0 | 0 | 2 | 100.0 | 99.9 | |
| | 1005 | 1005 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 1023 | 1023 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 665 | 663 | 2 | 99.7 | 0.3 | 0 | 0 | 99.7 | 99.7 | -2 |
| | 937 | 937 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 5 | 5 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 40 | 36 | 4 | 90.0 | 10.0 | 0 | 0 | 90.0 | 90.0 | Blnk-4 |
| | 14 | 14 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 207 | 207 | 0 | 100.0 | 0.0 | 0 | 4 | 100.0 | 98.1 | |
| | 413 | 413 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| | 1159 | 1159 | 0 | 100.0 | 0.0 | 0 | 0 | 100.0 | 100.0 | |
| Blank | 4637 | 4637 | 0 | 100.0 | 0.0 | 0 | 2 | 100.0 | 100.0 | |
| | 491 | 490 | 1 | | | 0 | 0 | 99.8 | 99.8 | -1 |
| Ins | | | | | | | 10 | | | Blnk-2; 4; 2; 1; 1 |
| Total | 22524 | 22511 | 13 | 99.94 | 0.06 | 0 | 10 | 99.94 | 99.90 | |

Table VIIIA summarizes the results of Arial, Tahoma, Akhbar, and Thuluth fonts. Table VIIIB summarizes the results of Naskh, Simplified Arabic, Andalus, and Traditional Arabic fonts. Arial font was included for comparison purposes. The tables show the average correctness and accuracy of all these fonts.

Because the resulting confusion matrices are too large to display in full (at least 126 rows × 126 columns would be needed), we summarize each confusion matrix more informatively by collapsing all the different shapes of the same character into one entry and listing the error details for each character. This corresponds to the result after the recognized text is converted from a unique code for each shape to a unique code for each character, which is done by the contextual analysis module, a tool we built for this purpose.
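The contextual analysis module is the authors' own tool. If recognized shapes were coded as Unicode Arabic presentation forms (an assumption; the source does not specify its coding), collapsing them to one code per character could be done with standard NFKC normalization, and shape-level confusion counts could be merged the same way. NFKC also expands ligature code points such as LAM WITH ALEF into two letters. The function names are hypothetical.

```python
import unicodedata
from collections import Counter
from typing import Iterable, Tuple


def collapse(text: str) -> str:
    """Map positional presentation forms to their base letters via NFKC."""
    return unicodedata.normalize("NFKC", text)


def collapse_confusions(pairs: Iterable[Tuple[str, str]]) -> Counter:
    """Merge (reference_shape, recognized_shape) pairs into per-letter counts."""
    return Counter((collapse(ref), collapse(hyp)) for ref, hyp in pairs)


# Initial, medial and final BEH all collapse to U+0628 ARABIC LETTER BEH.
print([hex(ord(c)) for c in collapse("\uFE91\uFE92\uFE90")])

# Two shape-level BEH->TEH confusions merge into one letter-level cell.
cells = collapse_confusions([("\uFE91", "\uFE97"), ("\uFE92", "\uFE98"),
                             ("\uFE90", "\uFE90")])
print(cells[("\u0628", "\u062A")])  # 2
```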

TABLE VIIIA: Summary of Results for Selected Fonts

| Let | Arial Correctness | Arial Accuracy | Tahoma Correctness | Tahoma Accuracy | Akhbar Correctness | Akhbar Accuracy | Thuluth Correctness | Thuluth Accuracy |
|---|---|---|---|---|---|---|---|---|
| S | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 98.8 | 96.3 | 96.3 | 100 | 97.4 |
| | 80 | 80 | 100 | 100 | 60 | 60 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 99.6 | 99.6 | 99.8 | 99.8 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 99.9 | 99.1 | 99 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 99.3 | 99.3 | 93.9 | 93.9 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 99.8 | 99.8 | 100 | 100 | 97.8 | 97.6 |
| | 100 | 100 | 100 | 100 | 98.4 | 92.6 | 100 | 100 |
| | 100 | 100 | 99.4 | 99.4 | 100 | 100 | 97.1 | 97.1 |
| | 98.3 | 98.3 | 94.4 | 94.4 | 100 | 100 | 82 | 82 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 98.2 | 98.2 |
| | 100 | 99.7 | 100 | 99.1 | 100 | 99.7 | 99.1 | 98.8 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 94.9 | 94.9 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 99.8 | 98.9 | 99.8 | 99.8 |
| | 100 | 100 | 100 | 100 | 99.2 | 99.2 | 99.2 | 99.2 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 99.8 | 99.8 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 98.9 | 98.9 |
| | 100 | 100 | 98.5 | 97.1 | 100 | 100 | 97.1 | 97.1 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 99.8 | 99.8 | 99.5 | 99.5 |
| | 100 | 100 | 100 | 100 | 97.7 | 97.7 | 97.7 | 97.7 |
| | 100 | 100 | 100 | 100 | 99.6 | 99.6 | 100 | 99.2 |
| | 100 | 100 | 100 | 100 | 98.9 | 98.9 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 99.9 | 100 | 97.9 | 98.1 | 97.9 | 99.8 | 99.7 |
| | 100 | 100 | 100 | 100 | 99.9 | 99.9 | 98.2 | 98.1 |
| | 100 | 100 | 100 | 100 | 99.4 | 99.3 | 99.1 | 99.1 |
| | 99.7 | 99.7 | 100 | 100 | 99.7 | 99.7 | 99.1 | 99.1 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 99.8 | 99.8 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 90 | 90 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 98.1 | 100 | 100 | 100 | 100 | 100 | 100 |
| | 100 | 100 | 100 | 100 | 100 | 100 | 89.1 | 89.1 |
| | 100 | 100 | 100 | 100 | 98.4 | 98.4 | 99.7 | 99.7 |
| B | 100 | 100 | 100 | 100 | 100 | 100 | 99.3 | 99.2 |
| | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 |
| T | 99.9 | 99.9 | 99.9 | 99.7 | 99.4 | 99.3 | 98.9 | 98.8 |


TABLE VIIIB

Summary of Results for Selected Fonts

| Letter | Naskh Correctness (%) | Naskh Accuracy (%) | Simplified Arabic Correctness (%) | Simplified Arabic Accuracy (%) | Traditional Arabic Correctness (%) | Traditional Arabic Accuracy (%) | Andalus Correctness (%) | Andalus Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| S | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 40 | 40 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 96.3 | 96.3 | 98.5 | 98.5 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 97.7 | 97.7 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.5 | 98.4 | 100 | 99.8 | 98.7 | 98.5 | 100 | 100 |
|  | 89.3 | 88.4 | 100 | 100 | 92.5 | 92.5 | 100 | 99.8 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.8 | 98.8 | 99.8 | 99.8 | 99.1 | 99.1 | 100 | 100 |
|  | 95.1 | 95.1 | 100 | 100 | 99.2 | 99.2 | 100 | 100 |
|  | 96.5 | 96.5 | 100 | 100 | 91.8 | 91.8 | 100 | 100 |
|  | 87.6 | 87.6 | 100 | 100 | 84.2 | 84.2 | 100 | 100 |
|  | 90.3 | 89.4 | 100 | 100 | 98.2 | 97.4 | 100 | 100 |
|  | 100 | 100 | 100 | 99.7 | 99.7 | 99.4 | 100 | 99.7 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.6 | 98.6 | 100 | 100 | 99.9 | 99.9 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 99.2 | 99.2 | 100 | 100 | 99.7 | 99.7 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.8 | 98.8 | 100 | 100 | 98.8 | 98.8 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 97.1 | 97.1 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.7 | 98.7 | 100 | 100 | 99.9 | 99.9 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 99.6 | 99.6 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 99.1 | 99.1 | 99.8 | 99.8 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 98.2 | 98.1 | 100 | 99.7 | 99.6 | 99.6 | 100 | 77.7 |
|  | 90 | 89 | 100 | 100 | 94.1 | 94 | 100 | 100 |
|  | 96.7 | 96.5 | 99.9 | 99.9 | 98.1 | 98 | 100 | 100 |
|  | 99.7 | 99.7 | 100 | 100 | 99.1 | 99.1 | 100 | 100 |
|  | 99.9 | 99.9 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 97.5 | 97.5 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 99 | 99 | 100 | 100 | 100 | 100 | 100 | 100 |
|  | 99.5 | 99.5 | 98.6 | 98.6 | 98.6 | 98.6 | 100 | 100 |
|  | 97.7 | 97.7 | 100 | 100 | 98.4 | 98.4 | 100 | 100 |
| B | 99.4 | 99.4 | 100 | 99.6 | 99.9 | 99.9 | 99.9 | 99.9 |
|  | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 | 99.8 | 100 | 100 |
| T | 98.2 | 98.1 | 99.8 | 99.7 | 98.9 | 98.8 | 100 | 97.9 |
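
The tables report Correctness and Accuracy per letter, but this section does not define the two measures. The sketch below assumes they follow the usual convention of HMM toolkits such as HTK's HResults tool. Let N be the number of reference characters, D the deletions, S the substitutions, and I the insertions. Correctness is (N - D - S) / N and ignores insertions, while Accuracy also subtracts I, so Accuracy can never exceed Correctness. The function names and the counts in the usage lines are hypothetical and are not taken from the tables.

```python
def correctness(n: int, deletions: int, substitutions: int) -> float:
    """Percent correct, (N - D - S) / N * 100; insertions are not penalized."""
    if n <= 0:
        raise ValueError("n must be positive")
    return 100.0 * (n - deletions - substitutions) / n


def accuracy(n: int, deletions: int, substitutions: int, insertions: int) -> float:
    """Percent accuracy, (N - D - S - I) / N * 100; insertions are penalized."""
    if n <= 0:
        raise ValueError("n must be positive")
    return 100.0 * (n - deletions - substitutions - insertions) / n


if __name__ == "__main__":
    # Hypothetical counts for one character class (not from the tables).
    n, d, s, i = 1000, 5, 10, 8
    print(correctness(n, d, s))  # 98.5
    print(accuracy(n, d, s, i))  # 97.7
```

Under these assumed definitions, a row in which Correctness is 100 but Accuracy is lower would point to spurious insertions rather than missed or confused characters.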

In summary, our method is based on a hierarchical sliding-window technique that uses both overlapping and non-overlapping windows. Based on an analysis of Arabic text lines, we modeled the Arabic text line as a stack of horizontal segments of different heights, so the technique uses the structure of the Arabic writing line to define which segments to use. Each sliding strip is represented by thirteen features, all of a single simple feature type, computed for each sliding window. The OCR method 10 preferably includes letters and ligatures in its classification. Each shape of an Arabic character is treated as a separate class, giving 126 classes in total. The OCR method 10 does not require segmentation of Arabic text. Finally, the OCR method 10 is language-independent and may therefore be used for the automatic recognition of other languages. We ran a successful proof of concept on English text and achieved recognition rates similar to those obtained for Arabic text, without any modification to the structure or parameters of our model.

A number of applications can use the OCR method 10. It can convert scanned printed Arabic text into textual information, which saves storage and makes these documents searchable and indexable. It can be embedded in search engines, including Internet search engines, enabling them to search scanned Arabic text. It can be integrated into office automation products and may be bundled with scanners for the Arabic market. The OCR method 10 can also work for other languages, especially Urdu, Persian, and Ottoman, to name a few. Finally, it may be integrated with an Arabic text-to-speech system, so that scanned Arabic text can be converted to text and then to speech for blind users.

It is to be understood that the present invention is not limited to the embodiment described above, but encompasses any and all embodiments within the scope of the following claims.
